Every major technological advancement prompts new ethical concerns or shines a fresh light on existing ones. Artificial intelligence is no different in that regard. As the property/casualty insurance industry taps the speed and efficiency generative AI offers and navigates the practical complexities of the AI toolset, ethical considerations must remain in the foreground.

Traditional AI systems recognize patterns in data to make predictions. Generative AI goes beyond predicting – it generates new data as its primary output. As a result, it can support strategy and decision making through conversational, back-and-forth “prompting” using natural language, rather than complicated, time-consuming coding.

A recently published report by Triple-I and SAS, a global leader in data and AI, discusses how insurers are uniquely positioned to advance the conversation for ethical AI – “not just for their own businesses, but for all businesses; not just in a single country, but worldwide.”

AI will inevitably influence the insurance sector, both in the types of perils covered and in how core functions such as underwriting, pricing, policy administration, and claims processing and payment are carried out. By shaping an ethical approach to implementing AI tools, insurers can better balance risk with innovation for their own businesses, as well as for their customers.

Conversely, failure to help guide AI’s evolution could leave insurers – and their clients – at a disadvantage. Without proactive engagement, insurers will likely find themselves adapting to practices that might not fully consider the specific needs of their industry or their clients. Further, if AI is regulated without insurers’ input, those regulations could fail to account for the complexity of insurance – leading to guidelines that are less effective or less equitable.

“When it comes to artificial intelligence, insurers must work alongside regulators to build trust,” said Matthew McHatten, president and CEO of MMG Insurance, in a webinar introducing the report. “Carriers can add valuable context that guides the regulatory conversation while emphasizing the value AI can bring to our policyholders.”

During the webinar, Peter Miller, president and CEO of The Institutes, noted that generative AI already is helping insurers “move from repairing and replacing after a loss occurs to predicting and preventing losses from ever happening in the first place,” as well as enabling efficiencies across the risk-management and insurance value chain.

Jennifer Kyung, chief underwriting officer for USAA, discussed several use cases involving AI, including analyzing aerial images to identify exposures for her company’s members. If a potential concern about a property’s condition is identified, she said, “We can trigger an inspection or we can reach out to those members and have a conversation around mitigation.”

USAA also uses AI to transcribe customer calls and “identify themes that help us improve the quality of our service.” Future use cases Kyung discussed include using AI to analyze claim files and other large swaths of unstructured data to improve cost efficiency and customer experience.

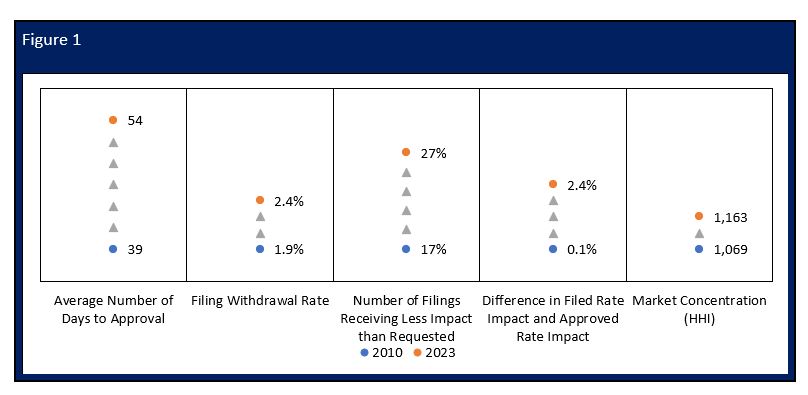

Mike Fitzgerald, advisory industry consultant for SAS, compared the risks associated with generative AI to the insurance industry’s experience with predictive models in the early 2000s. Predictive models and insurance credit scores are two innovations that have benefited policyholders but have not always been well understood by consumers and regulators. Such misunderstandings have led to pushback against these underwriting and pricing tools, even though they match price to risk more accurately.

Fitzgerald advised insurers to “look back at the implementation of predictive models and how we could have done that differently.”

When it comes to AI-specific perils, Iris Devriese, underwriting and AI liability lead for Munich Re, said, “AI insurance and underwriting of AI risk is at the point in the market where cyber insurance was 25 years ago. At first, cyber policies were tailored to very specific loss scenarios… You could really see cyber insurance picking up once there was a spike of losses from cyber incidents. Once that happened, cyber was addressed in a more systematic way.”

Devriese said lawsuits related to AI are currently “in the infancy stage. We’ve all heard of IP-related lawsuits popping up and there’ve been a few regulatory agencies – especially here in the U.S. – who’ve spoken out very loudly about bias and discrimination in the use of AI models.”

She noted that AI regulations have recently been introduced in Europe.

“This will very much spur the market to form guidelines and adopt responsible AI initiatives,” Devriese said.

The Triple-I/SAS report recommends that insurers lead by example, developing detailed plans to deliver ethical AI in their own operations. This will position them as trusted experts who can help lead the wider business and regulatory community in implementing ethical AI. The report includes a framework for implementing an ethical AI approach.

LEARN MORE AT JOINT INDUSTRY FORUM

Three key contributors to the project – Peter Miller, Matthew McHatten, and Jennifer Kyung – will share their insights on AI, climate resilience, and more at Triple-I’s Joint Industry Forum in Miami on Nov. 19-20.